By Inès Najeh / GICJ

As new technologies evolve, individuals’ human rights evolve in parallel. Information and Communication Technologies (ICTs) are now fully part of our lives: they cover internet use in general, chatting with friends online, education, and more. Within ICTs, we speak of emerging technologies, which are expected to significantly impact various sectors of society and everyday life. These technologies often have the potential to revolutionise existing processes, create new opportunities and address existing challenges. According to the World Economic Forum (WEF) report “Top 10 Emerging Technologies of 2023”, emerging technologies can be characterised by key features such as their:

- Novelty: the technology is emerging and at an early stage of incipient development, not already widely used.

- Applicability: the technology has the potential to be of significant use and benefit to societies and economies in the future; is not of only marginal concern.

- Depth: it is being developed by more than one company and is the focus of increasing investment interest and excitement within the expert community.

- Power: it is potentially powerful and disruptive in altering established ways and industries.[1]

One example of an emerging technology is Artificial Intelligence (AI), which is now routinely used in our everyday lives. Its use has almost become the norm, especially among the younger generation. AI can be defined as the ability of a machine to perform tasks that simulate human intelligence, such as analysis, planning, creativity, or reasoning. AI is continuously advancing, enhancing various aspects of our daily lives by providing smart solutions and increasing efficiency. Its rapid evolution brings significant benefits, such as improved healthcare, smarter home devices, and connectivity. However, this fast progress often outpaces our ability to thoroughly study and understand the potential consequences, leading to challenges around ethical use, data privacy, and security. As AI technologies become more integrated into society, balancing innovation with careful consideration of their long-term impacts, especially regarding human rights, will become crucial.

At the World Standards Cooperation meeting on 24 February 2023, the United Nations High Commissioner for Human Rights, Volker Türk, stated that it is crucial to “incorporate the common language of human rights into the way we regulate, manage, design, and use new and emerging technologies”[2]. In 2019, at the AI for Good Global Summit, UN Secretary-General António Guterres stated that in order to “harness the benefits of AI and address the risks”, everyone needs to collaborate, including governments, civil society and corporations, to create effective frameworks and harness technologies “for the common good”.[3]

On 30 November 2023, Volker Türk gave another statement on the issue of AI and emerging technologies where he emphasised that, on the one hand, AI has the potential to transform our lives and on the other hand, it also poses important risks to human rights. As such, he reiterated the importance of integrating human rights into AI development by working with government and corporations “to establish effective risk management frameworks and operational guardrails”[4].

What has been done?

Nowadays, it seems more appropriate to use the term “digital rights” when referring to the basic human rights and freedoms that apply to the online world, including privacy, freedom of expression, access to information, data protection, and non-discrimination. Human rights need to be applied both offline and online, as new technologies can be used to violate them. For example, the UN considers that even if the rights set out in the United Nations Convention on the Rights of the Child (UNCRC), adopted in November 1989, were “formulated in the pre-digital era, the rights enshrined therein remained as relevant as ever”. This statement, from a 2014 report of the Committee on the Rights of the Child on “digital media and children’s rights”, treats the Convention and other international texts on children’s human rights as living instruments that evolve with society and must be interpreted in light of new technologies[5].

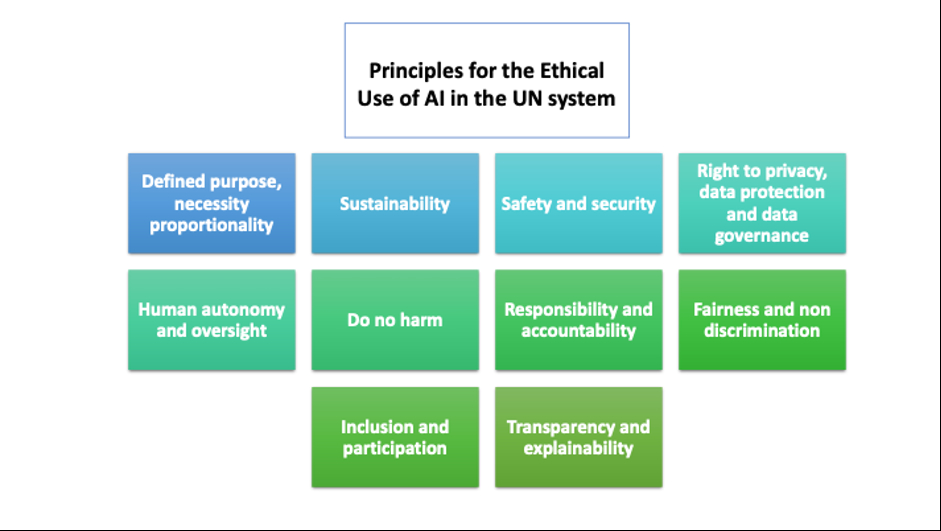

To ensure sustainable and effective protection of human rights, it is necessary to put a digital rights framework in place. The work of the Organisation for Economic Co-operation and Development (OECD) and the UN Guiding Principles (UNGPs) can serve as a foundation regarding the responsibility of corporations and States when it comes to digital technologies. However, these frameworks are insufficient to combat the misuse of AI and emerging technologies by States or corporations and the rights violations that result. For this reason, the Office of the UN High Commissioner for Human Rights (OHCHR) released in 2023 the “B-Tech Taxonomy of Generative AI Human Rights harms”, which examines the human rights risks associated with the creation, implementation, and utilisation of AI technology and lists potential risks to these rights. Moreover, UNESCO created the “first-ever global standard on AI ethics – the ‘Recommendation on the Ethics of Artificial Intelligence’ in November 2021. This framework was adopted by all 193 Member States”[6]. Based on these recommendations, UNESCO came up with 10 Principles for the Ethical Use of Artificial Intelligence in the United Nations System, meant to ensure a safe, ethical and human rights respectful development of AI and emerging technologies.

Finally, on 21 March 2024, the UN General Assembly adopted its first resolution on AI, A/78/L.49, on seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development. While non-binding, the adoption marks a significant step toward safety and represents a strong commitment to govern AI and ensure that fundamental rights and freedoms are respected. Indeed, the resolution emphasised that “The same rights that people have offline must also be protected online, including throughout the life cycle of artificial intelligence systems”.

Wide recognition of the need for AI governance

Numerous organisations within the UN system and beyond have examined the impact of emerging technologies like artificial intelligence (AI) on human rights, particularly emphasising the necessity of robust governance. Governance is essential to ensure that technological advancements are implemented safely and do not infringe upon human rights and freedoms. Effective regulation of these technologies is crucial to maintain transparent decision-making processes and hold the creators accountable for their innovations.

The goal of achieving the 17 Sustainable Development Goals (SDGs) by 2030 remains a significant challenge and is currently off track. Nevertheless, the UN General Assembly has acknowledged the potential of AI and other emerging technologies to speed up progress towards these goals. This idea was reiterated by the UN Deputy Secretary-General during the Opening of the ECOSOC Special Meeting, where she highlighted the need to harness “this powerful technology to accelerate sustainable development while mitigating its harms (…) To do this, we must ensure that AI is effectively governed, that it is equitable, accessible, and ethical”[7]. Proper governance of AI and similar technologies is about both preventing harm and promoting fairness, accessibility, and ethical standards. Such governance frameworks are indispensable for fostering innovation that aligns with human rights and contributes positively to society. By regulating the development of AI, it will be possible to enhance accountability, ensure equitable access, and uphold ethical practices, thereby leveraging technology's full potential to advance sustainable development.

Moreover, the AI for Good Global Summit is an initiative that promotes the use of AI to advance progress on issues such as health, climate, gender, and sustainable development, and showcases new technologies such as AI robots. It is organised by the International Telecommunication Union (ITU) in partnership with other UN agencies. This year, the Summit was held in Geneva from 30 to 31 May 2024, gathering thousands of people from around the globe, including scientists, academics, and experts, to bring solutions to multiple AI-related issues[8].

The AI for Good Summit focused on the importance of governance, as AI is advancing at an exponential speed and becoming ever smarter and more efficient. Indeed, it has become clear that misinformation, bots and internet-related crimes, among others, are a danger to the protection of human rights and freedoms. During a meeting on the AI Dilemma, the Co-founder of the Center for Humane Technology emphasised the need for the international community to implement governance tools that match the speed of technology and to develop policies and frameworks that hold developers of AI and other technologies accountable. It would have been valuable for the AI for Good Summit to hold a discussion with human rights experts in the digital field, to fully convey why governance is necessary and how deeply AI and other technologies affect individuals’ rights.

When applied safely, AI can accelerate progress towards the SDGs, enhance decision-making, and drive innovation. At the same time, it is important to recognise that AI and emerging technologies can significantly impact a wide range of human rights. For example, they can influence the right to education and the right to non-discrimination, among others, both positively and negatively. Issues such as disinformation and the misuse of bots highlight the potential for harm if these technologies are not properly regulated.

Accessibility to the right to education

The adoption of the United Nations Sustainable Development Goals (UNSDGs) in 2015 has pushed international organisations and Member States to create new solutions to ensure that the right to education is respected and accessible for everyone, especially children.

The UN understands that the interpretation of the right to education needs to adapt in parallel with new technologies to allow children to develop their abilities to their fullest potential. For example, new technologies and AI are being used to help achieve the SDGs, notably by the International Telecommunication Union (ITU).[9] According to the ITU, AI can be an effective way to improve the right to education, especially in developing countries. It gives children access to a large amount of information and resources that help them learn and understand subjects covered in class or needed for school projects. However, incorporating the use of AI into national legislation can be complicated, especially because of the dangers that AI represents.

Article 28 of the UNCRC protects the right to education and provides that the State must “encourage the development of different forms of secondary education (...) on the basis of equal opportunity”. This goal can be achieved by linking this human right with the notion of “media literacy”, a skill every child should be able to use in the long term. Media literacy can be defined as the ability to apply critical thinking to media and to form one's own opinion on different matters. This approach is becoming more popular: the Council of Europe Guide to Human Rights for Internet Users explains that through media literacy and access to digital education and knowledge on the internet, children can understand and exercise their human rights and freedoms online and offline. As new technologies keep expanding, the education sector gains better learning opportunities for children. For instance, many countries now allow schools to use tablets in class so that pupils can work more efficiently and learn in a way they may find more engaging, and many students are assigned online homework on specific websites, sometimes ones created by the State. The Internet continues to be a great resource, as it contains a multitude of information that helps children better understand class subjects and school projects while making learning easier thanks to summaries, eBooks, and similar materials.

Furthermore, Article 29 of the UNCRC is important as it sets out the aims of education. For example, the use of the internet and communication tools by minors can help them obtain information about their health, their rights, and the news (63% of 15-year-olds use the internet to read the news at least once a week). Article 29 (a) states that education must be directed to the development of children’s personalities, talents, and mental and physical abilities to their fullest potential, which today includes access to ICTs. This right is respected when minors use new technologies to learn about their health, whether mental or physical, or to develop their sexuality and identity and build their confidence. In fact, by learning how to share their thoughts online, children can build more confidence and learn about different perspectives and opinions, which prepares them for a “responsible life in a free society” (Article 29 (d)). Moreover, the digital environment can support the right to freedom of expression protected by Article 13 of the UNCRC, as it allows children to voice their opinions on subjects that matter to them and directly affect them. This is what children worldwide explain in “Our Rights in a Digital World”.

The right to equality, non-discrimination and dignity

Article 1 of the Universal Declaration of Human Rights (UDHR) states, "All human beings are born free and equal in dignity and rights." Article 2 declares, "Everyone is entitled to all the rights and freedoms set forth in this Declaration, without distinction of any kind." While AI and emerging technologies have the potential to advance these principles, they also pose significant risks to equality and non-discrimination. For instance, AI technologies can be harmful primarily through biased algorithms and profiling systems. These technologies can reinforce and amplify existing societal biases, leading to discriminatory outcomes. Facial recognition systems often exhibit higher error rates for people of colour, and profiling tools used in law enforcement or immigration control can disproportionately target marginalised communities. Additionally, automated bots and decision-making systems, if not properly regulated, can perpetuate and institutionalise bias, undermining efforts to achieve equality. The risk that AI discriminates highlights the urgent need for robust frameworks and oversight to ensure these technologies do not violate human rights.

Furthermore, bots and disinformation can significantly undermine the right to non-discrimination and equality by spreading biased narratives and reinforcing harmful stereotypes. These automated systems can amplify discriminatory messages and misinformation, targeting specific groups based on race, gender, religion, or other characteristics, thereby exacerbating social divisions. In addition, disinformation can manipulate public perception and influence decision-making processes, often to the detriment of marginalised communities.

On the positive side, AI can enhance connectivity, providing greater access to information and communication, which supports social inclusion.

For children, the right to non-discrimination is mainly protected by Article 2 of the UNCRC. On 24 March 2021, the UN Committee on the Rights of the Child adopted a general comment on the impact of new technologies and the digital environment on the rights of the child and on the new forms of protection to be developed to better protect them. This general comment indicates that discrimination against children with disabilities can be reduced by the digital environment, as it creates more opportunities to form social relationships with children of their own age and allows them, for example, to attend classes online if they are unable to travel.

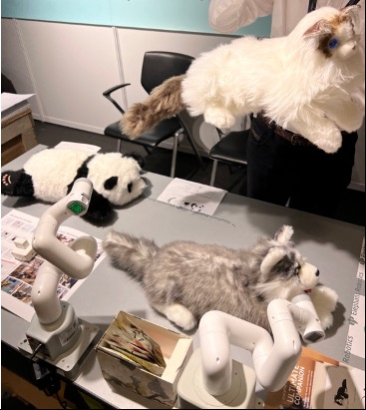

Additionally, AI and emerging technologies in healthcare can improve the quality of life for individuals with chronic illnesses, enhancing their right to dignity by enabling better disease management and personal autonomy. Elephant Robotics, which was present at the AI for Good Global Summit, created metaCat and metaDog partly to offer people with disabilities and the elderly a companion: extremely realistic robots that simulate the sounds and heartbeat of a real animal.

The Geneva International Centre for Justice (GICJ) emphasises the crucial need for robust governance of Artificial Intelligence (AI). GICJ advocates for the effective and sustainable implementation of human rights principles within AI policies and frameworks. We underscore that all States are responsible for protecting individuals from human rights abuses induced by AI and emerging technologies. To achieve this, States must align their regulatory frameworks with their obligations under international human rights law. GICJ calls for comprehensive international cooperation to ensure that AI advancements do not undermine human rights, but rather contribute to their promotion and protection.

[1] https://www.weforum.org/publications/top-10-emerging-technologies-of-2023/#:~:text=The%20Top%2010%20Emerging%20Technologies,their%20associated%20risks%20and%20opportunities

[2] https://www.ohchr.org/en/statements/2023/02/turk-addresses-world-standards-cooperation-meeting-human-rights-and-digital

[3] https://www.un.org/sg/en/content/sg/statement/2019-05-28/secretary-generals-message-for-third-artificial-intelligence-for-good-summit

[4] https://www.ohchr.org/en/statements-and-speeches/2023/11/turk-calls-attentive-governance-artificial-intelligence-risks Generative Artificial Intelligence and Human Rights Summit

[5] https://www.ohchr.org/sites/default/files/Documents/HRBodies/CRC/Discussions/2014/DGD_report.pdf

[6] https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

[7] https://press.un.org/en/2024/dsgsm1905.doc.htm

[8] https://aiforgood.itu.int/summit24/programme/#day1

[9] https://www.itu.int/en/ITU-T/AI/Pages/default.aspx